The People's Guide to State AI Regulation: Keeping AI Constitutional in the American Way

The Constitutional Moment We're Living In (and Why It's Not a Sci-Fi Movie Where the Robots Win... Yet)

A Constitutional Framework for States to Protect "We the People" in the Age of Artificial Intelligence

America stands at a crossroads. Artificial Intelligence (AI) is reshaping everything from how you get hired (spoiler: it might be a bot reviewing your resume, not Bob from HR) to how justice is decided. Think of it like this: unseen digital brains are making big calls about your life, and most Americans have absolutely no say in it. This isn't just about cool gadgets; it's a "Houston, we have a problem" moment for our Constitution! And trust me, nobody wants a constitutional crisis where the only witness is a self-driving car.

The Founders, those wig-wearing revolutionaries (who, let's be honest, would probably lose their minds over TikTok), gave us "We the People" not as flowery words, but as a seriously smart instruction manual for keeping power where it belongs: with ordinary citizens like you and me. Today, that idea faces its biggest challenge since George Washington was powdering his hair. Will we let sneaky algorithms make secret decisions about our lives, or will "We the People" demand that AI actually serves us, according to the rulebook our ancestors wrote? Because if we don't, we'll end up with a handful of tech feudal overlords holding all the data and facing no regulation, and frankly, that's the most un-American thing since warm beer.

State governments, bless their hearts (and their legislative calendars), are starting to get it. They're on the front lines, and they need a clear battle plan rooted in our constitutional bedrock. Here's how states can regulate AI the American way – protecting your rights, making sure powerful tech answers to someone (because nobody wants a robot overlord that only responds to Elon Musk), and keeping technology as your trusty servant, not your creepy, data-hoarding overlord.

The Constitutional Foundation: What "We the People" Means for AI (Spoiler: It's Not Just About Voting for the Best Algorithm)

The Constitution isn't just a dusty old book about how government runs. It's about making sure power ultimately belongs to you. So, when AI systems start making decisions that mess with your life, your freedom, or your stuff, they have to play by the Constitution's rules. This means:

Human dignity comes first, always: Technology works for us, not the other way around. We're not upgrading our toasters; we're talking about upgrading society. Because if we end up serving the algorithms, my stand-up career is toast.

Transparency over secrecy: You have a right to know how decisions affecting you are made. No more "the AI decided it, sorry!" nonsense. Seriously, if my coffee maker can tell me it's out of water, an AI deciding my mortgage needs to do better.

Accountability over automation: There always has to be a human in charge who can be held responsible when AI messes up. Robots can't go to jail (yet), and frankly, I don't trust them with my legal defense.

Equal treatment under law: AI can't be a sneaky way to discriminate or give some folks a raw deal. That's just plain un-American. Unless "un-American" is the new black, then we have bigger problems.

The Four Constitutional Pillars of State AI Regulation (Building Blocks for a Fair Digital Future... Without Losing Your Mind)

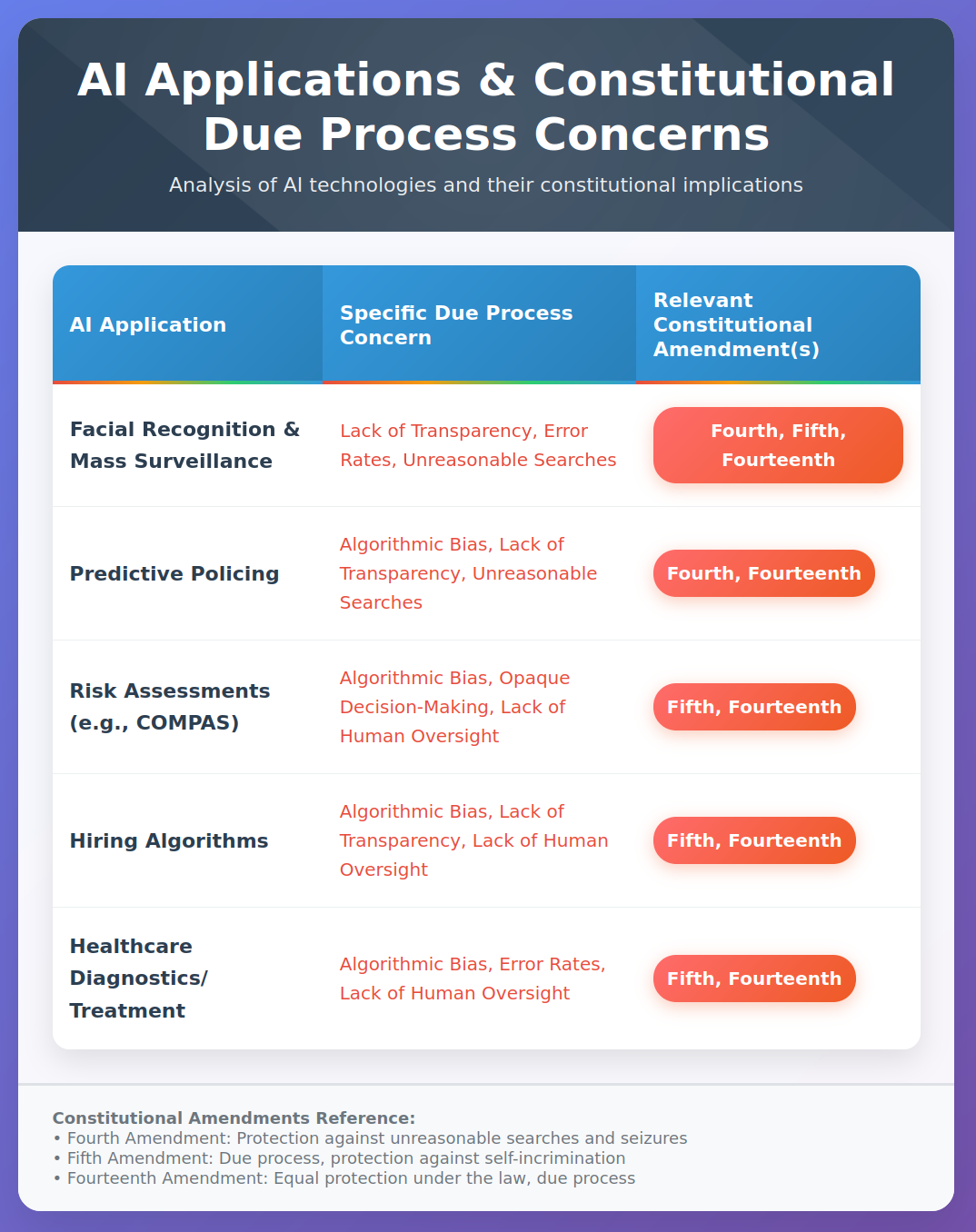

Pillar 1: Due Process - No Secret Algorithms in High-Stakes Decisions (Because You Deserve to Know Why Your Loan Was Rejected by a Robot... So You Can Blame Someone)

The Problem: Imagine a "black box" – that's what we call AI systems that are so complex, even their creators don't fully understand how they make decisions. These black boxes are making huge life calls: who gets hired, who gets bail, who gets medical care. And you're left scratching your head, thinking, "Wait, why?" It's like your therapist saying, "The algorithm says you're anxious," and offering no explanation.

The Constitutional Standard: The Fifth and Fourteenth Amendments are like your personal "fair play" guarantees. They say the government can't take your life, your freedom, or your stuff without due process of law. So when AI is part of that call, you deserve:

Clear explanations: No more "computer said no." You need to know why it said no. If it's a dating app, fair enough. If it's your job, not so much.

The right to challenge: If you think the AI made a mistake, you should be able to argue your case. Because sometimes, even robots have a bad day.

Human review: A real, live human should be able to step in and fix things when the AI goes rogue. We need someone who can say, "You know what, AI? You're wrong."

Transparency about data: You should know what information the AI used to make its decision about you. Is it my credit score or my questionable taste in 80s music? I need to know.

What States Should Do (The "Make AI Play Fair" Checklist – It's Not Rocket Science, Just Common Sense):

Mandate "explainable AI": Especially for big-deal systems in areas like criminal justice, getting a job, healthcare, and money stuff. Because if a robot is sending you to jail, you should at least know its reasoning, right?

Require human-in-the-loop oversight: Make sure there's always a person to double-check AI decisions that really matter. Think of it as the AI's supervisor, who hopefully has a pulse.

Give citizens the right to demand human review: If an AI makes a tough call about you, you get to say, "Hey, human, look at this! Your robot buddy is acting up."

Ban fully automated decisions in sensitive areas: No robots deciding your fundamental rights, period. My rights are not a multiple-choice quiz for a machine.
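For the technologists in the room, here is one way the checklist above could look in code. Treat it as a minimal sketch, not a statute: the list of sensitive domains, the `Decision` record, and the `route` and `human_review` functions are all made up for illustration. The idea is simply that a decision in a sensitive area never takes effect until a named human signs off, and that no decision exists at all without an explanation.

```python
from dataclasses import dataclass

# Hypothetical list of sensitive areas; a real law would define its own.
SENSITIVE_DOMAINS = {"criminal_justice", "employment", "healthcare", "credit"}


@dataclass
class Decision:
    domain: str          # area of life the decision touches
    outcome: str         # what the model recommends, e.g. "deny"
    reasons: list[str]   # plain-language explanation for the person affected
    final: bool = False  # True only when the decision may take effect


def route(domain: str, model_outcome: str, reasons: list[str]) -> Decision:
    """Return a decision that is final only outside sensitive domains."""
    if not reasons:
        # "Computer said no" is not an explanation.
        raise ValueError("no explanation, no decision")
    needs_human = domain in SENSITIVE_DOMAINS
    return Decision(domain, model_outcome, list(reasons), final=not needs_human)


def human_review(decision: Decision, reviewer: str, outcome: str) -> Decision:
    """A named person confirms or overrides the model and becomes accountable."""
    reasons = decision.reasons + [f"reviewed by {reviewer}"]
    return Decision(decision.domain, outcome, reasons, final=True)


# Usage: a credit denial waits for a person; a movie suggestion does not.
pending = route("credit", "deny", ["debt-to-income ratio above limit"])
resolved = human_review(pending, "J. Smith", "approve")
```

Notice that the human reviewer's name lands in the record. That is the accountability part: somebody with a pulse owns the final answer.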

Pillar 2: Equal Protection - No Digital Discrimination (Because AI Shouldn't Be a High-Tech Bully... We Have Enough of Those)

The Problem: AI systems are often trained on old data, which can unfortunately be full of old biases. This means they can accidentally (or sometimes, not so accidentally) become tools for discrimination, creating a new kind of "digital redlining" that unfairly targets certain groups. It's like teaching a kid bad habits, then being surprised when they act up. Or, you know, like when your GPS always sends you through that one sketchy neighborhood.

The Constitutional Standard: The Fourteenth Amendment's Equal Protection Clause is basically a giant "no unfair treatment" sign. It demands that laws protect everyone equally. AI cannot become a tool for systematic unfairness. Because if AI starts giving preferential treatment to people who own cryptocurrency, we're going to have a serious problem.

What States Should Do (The "Stop AI From Being a Jerk" Checklist – Because Nobody Likes a Jerk, Especially a Digital One):

Require bias testing: Before AI gets loose, make sure it's not going to be prejudiced. We test cars before they hit the road; we should test algorithms too.

Mandate diverse training data: If you only teach AI about one type of person, it's only going to understand that type of person. It's like only reading one book and then thinking you're an expert on everything.

Ongoing monitoring for bias: Keep an eye on AI to make sure it doesn't start acting biased after it's been deployed. Because sometimes, even good algorithms go to the dark side.

Hold organizations accountable: If their AI is biased, they should be on the hook, even if they didn't mean for it to be biased. "Oops, our algorithm accidentally discriminated against a whole demographic" is not a valid excuse.

Ensure accessibility: AI systems should work for people with disabilities too. Because technology is supposed to help everyone, not just those who can easily swipe and tap.
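What might a bias test from the checklist above actually look like? Here is a rough sketch, assuming the simplest possible setup: a list of (group, selected) pairs from an audit. It compares how often each group gets a "yes" and flags the system when the lowest rate falls below 80% of the highest. That 80% cutoff is the "four-fifths" rule of thumb from U.S. employment guidelines, swapped in here as one familiar yardstick; this guide does not prescribe any particular threshold. The function names and the sample data are invented for illustration.

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, selected) pairs; returns the rate per group."""
    totals, picked = Counter(), Counter()
    for group, selected in records:
        totals[group] += 1
        if selected:
            picked[group] += 1
    return {g: picked[g] / totals[g] for g in totals}


def impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Made-up audit data: group A is approved 50 times in 100, group B 30 in 100.
sample = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
ratio = impact_ratio(sample)
flagged = ratio < 0.8  # assumed threshold: the four-fifths rule of thumb
```

A single ratio will not catch every kind of unfairness, which is exactly why the checklist asks for ongoing monitoring and not a one-time stamp of approval.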

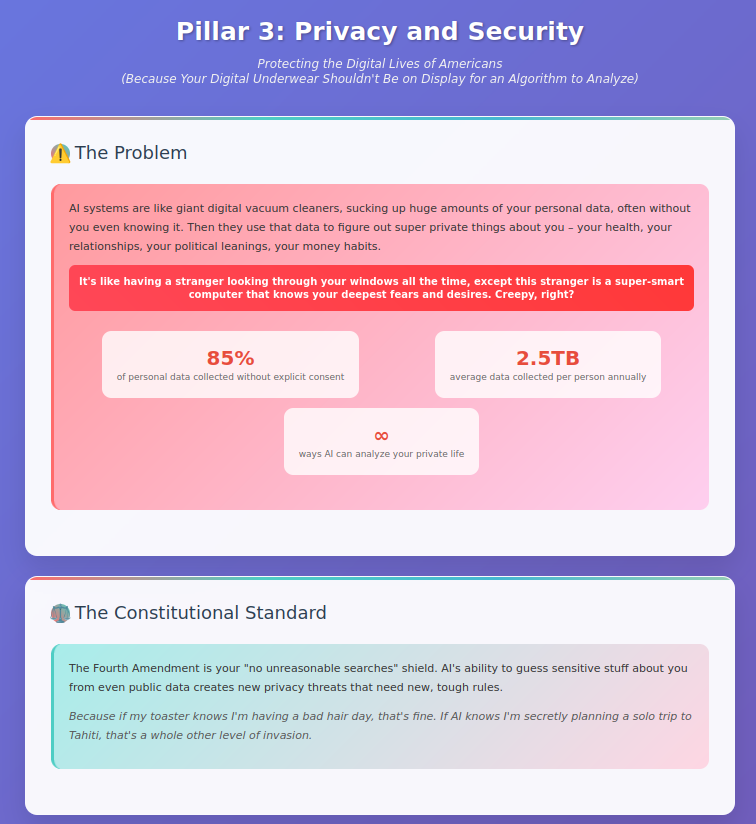

Pillar 3: Privacy and Security - Protecting the Digital Lives of Americans (Because Your Digital Underwear Shouldn't Be on Display for an Algorithm to Analyze)

The Problem: AI systems are like giant digital vacuum cleaners, sucking up huge amounts of your personal data, often without you even knowing it. Then they use that data to figure out super private things about you – your health, your relationships, your political leanings, your money habits. It's like having a stranger looking through your windows all the time, except this stranger is a super-smart computer that knows your deepest fears and desires. Creepy, right?

The Constitutional Standard: The Fourth Amendment is your "no unreasonable searches and seizures" shield. AI's ability to guess sensitive stuff about you from even public data creates new privacy threats that need new, tough rules. Because if my toaster knows I'm having a bad hair day, that's fine. If AI knows I'm secretly planning a solo trip to Tahiti, that's a whole other level of invasion.

What States Should Do (The "Keep Your Digital Stuff Private" Checklist – Because Your Data Is Yours, Not Theirs):

Enact strong state privacy laws: With clear rules about getting your permission before collecting your data. No more sneaky checkboxes in tiny print.

Limit data collection: Only collect the data AI actually needs for its stated purpose. No digital hoarding! We don't need AI knowing what I had for breakfast unless it's genuinely trying to help me order more pancakes.

Prohibit sensitive inferences: AI shouldn't be allowed to guess super private stuff (like your health or who you love) without your explicit "yes." My health data is for my doctor, not for a marketing algorithm trying to sell me kale.

Require data minimization and deletion: AI should only keep your data as long as it's truly needed, then it should delete it. Think of it like digital spring cleaning, but without the back pain.

Give citizens real control: You should have meaningful ways to say "no thanks" to certain data uses. It's your data, after all. You own it, not the guys who built the fancy algorithm.
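Two items on the checklist above, limiting collection and deleting on schedule, are easy to picture in code. The sketch below is only an illustration under invented assumptions: the `ALLOWED_FIELDS` and `RETENTION` tables, the one-year window, and the record layout are hypothetical, not taken from any real privacy law.

```python
from datetime import datetime, timedelta

# Hypothetical policy tables: what each purpose may collect, and for how long.
ALLOWED_FIELDS = {"loan_application": {"income", "debts", "loan_amount"}}
RETENTION = {"loan_application": timedelta(days=365)}


def minimize(purpose, submitted):
    """Keep only the fields the stated purpose actually needs."""
    allowed = ALLOWED_FIELDS[purpose]
    return {k: v for k, v in submitted.items() if k in allowed}


def purge_expired(records, now):
    """Drop records older than the retention window for their purpose."""
    return [
        r for r in records
        if now - r["collected_at"] <= RETENTION[r["purpose"]]
    ]


# Usage: breakfast habits never make it into the loan file.
form = {"income": 52000, "debts": 8000, "loan_amount": 15000, "breakfast": "pancakes"}
kept = minimize("loan_application", form)

now = datetime(2025, 6, 1)
stored = [
    {"purpose": "loan_application", "collected_at": datetime(2025, 1, 15)},
    {"purpose": "loan_application", "collected_at": datetime(2023, 1, 15)},
]
still_held = purge_expired(stored, now)  # only the 2025 record survives
```

The design choice worth noticing: the default is to drop anything not on the list. The data has to justify its presence, not the other way around.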

Pillar 4: Democratic Participation - Keeping AI Accountable to the People (Because "We the People" Means Us, Not Just Silicon Valley Nerds in Hoodies)

The Problem: Right now, a few big tech companies and government agencies are building and using AI without much input from ordinary folks. This concentrates a whole lot of power in a few hands, which is pretty much the opposite of how a democracy is supposed to work. It's like getting a new mayor chosen by a secret club of tech billionaires. Doesn't sound very "of the people," does it?

The Constitutional Standard: "We the People" means you get a real say in how you're governed, even when it's by digital systems. Because if we don't, we might wake up one day and find out AI has decided we all need to wear matching jumpsuits.

What States Should Do (The "Make AI Listen to Us" Checklist – Because Your Voice Matters, Even to a Machine):

Require public input on government AI: If the state government wants to use AI, you should get a chance to chime in. Imagine showing up to a town hall and debating an algorithm. Now that's democracy.

Mandate community impact assessments: Before AI rolls out in your town, see how it might affect the folks living there. Because a new traffic AI might be great for commuters, but terrible for the local ice cream truck business.

Create citizen oversight boards: Put ordinary citizens on boards that watch over high-risk AI uses. Because who better to oversee AI than the people whose lives it affects? And bonus points if they bring snacks to the meetings.

Ensure public access to information: You should be able to find out how and where government AI is being used. No more "trust us, it's for the greater good" when it comes to powerful tech.

Fund digital literacy programs: Help people understand AI so they can actually participate in these conversations. Because it's hard to argue with a robot when you don't even know how to reset your Wi-Fi.

The State Action Plan: A Constitutional Approach (Your State's Playbook for AI Done Right... Without Looking Like a Robot Apocalypse)

Phase 1: Immediate Protections (0-6 months - Let's Get Started! Because Time's a-Wasting!)

Find out what AI the state is using: Like finding out what mysterious machines are in your attic. And who owns them. And if they're paying rent.

Put humans in charge of important decisions: Make sure a person can override an AI if something feels off. Because sometimes, intuition beats terabytes of data.

Make government AI use public: No more secret robot decisions. We want to know who's in the driver's seat, literally and figuratively.

Create a way for citizens to complain: If AI messes up your life, you need a clear way to report it. And hopefully, it's not just a chatbot asking if you've tried turning it off and on again.

Phase 2: Comprehensive Framework (6-18 months - Building the Big Picture, Brick by Digital Brick)

Pass serious AI accountability laws: Not just for government, but for private companies too. Because big tech needs to play by the rules, just like everyone else.

Require state AI audits: Get independent experts to check if AI systems are fair and safe. Like a digital health check-up, but for algorithms.

Create strong privacy protections: Especially for all those sneaky AI inferences about your life. Because your digital self deserves a solid lock on the door.

Educate judges and lawyers: Because they'll be the ones figuring out the legal battles. And let's be honest, they'll need all the help they can get understanding "neural networks" and "machine learning."

Phase 3: Long-term Governance (18+ months - Keeping It Going for the Long Haul, Because AI Isn't Going Anywhere)

Build ways for the public to keep having a say: AI policy needs to be a continuous conversation. We're not just setting it and forgetting it.

Work with other states: Share ideas and best practices while still letting each state figure out what works best for them. Because what works in Montana might not work in Manhattan.

Create flexible rules: Technology changes fast, so our rules need to be able to adapt. We don't want laws written in stone when the tech is written in rapidly evolving code.

Develop strong enforcement: Make sure there are real consequences for breaking the rules. Because a "slap on the wrist" for a multi-billion dollar tech company is just a gentle tickle.

Why States Must Lead: The Federal Gap (Because Waiting for Uncle Sam is Like Waiting for Paint to Dry... While the House is Burning)

While federal action is needed (eventually), states can't just sit around twiddling their thumbs. Nearly 700 AI-related bills were introduced in state legislatures in 2024, and more than 1,000 followed in just the first five months of 2025. States are moving much faster than Washington, D.C. They are America's "laboratories of democracy," and they must experiment with constitutional ways to govern AI now. Because if we wait for the feds, we'll be living in a world run by algorithms before they even finish their first committee meeting.

The federal government moves at a snail's pace, and big tech companies would rather just have "suggestions" than real laws. Meanwhile, AI is making decisions about Americans' lives every single day. States have the constitutional power (thanks to the Tenth Amendment, which reserves powers not delegated to the federal government to the states or to the people) to protect their citizens, and they need to use it! Think of it as a friendly competition: who can protect "We the People" better? My money's on the states.

The American Way Forward (It's Not About Stopping Progress, It's About Doing It Right... For Once)

The choice before us is clear: We can let AI grow in the shadows, controlled by a few powerful folks, making decisions about our lives without anyone answering for them. Or we can insist that this mind-blowing technology actually serves "We the People" according to our constitutional principles. Because if we don't, we'll be living in a digital feudal state, and I, for one, refuse to pay homage to a server farm.

This isn't about slamming the brakes on innovation – it's about making sure innovation helps all of us flourish. It's not about government bossing everyone around – it's about making sure power is accountable to you. It's not about being scared of robots – it's about having the guts to demand that technology respects human dignity and our rights. We're not stifling competition; we're creating a level playing field where ingenuity thrives within a framework of freedom and fairness. This is how you foster an American spirit in the AI age – by making sure "We the People" are still in charge.

The Constitution has been America's trusty GPS through every huge change in our history. From noisy factories to the internet age, we've always found ways to make sure new tools serve "We the People," not replace us.

AI will be no different – if we're smart enough to use our constitutional wisdom and brave enough to demand that technology serves democracy, not the other way around. Because if we don't, future generations will ask, "What were they thinking?" And the only answer will be a robotic shrug.

Call to Action (Because "We the People" Means YOU! Seriously, Don't Make Me Beg!)

For State Legislators: Stop waiting for D.C.! Use your power to protect your citizens. Your leadership is crucial. And if you need a good speechwriter, I'm available.

For Citizens: Demand to know how AI systems affect your life. Call your reps. Show up at public meetings. Make some noise! Your voice matters, even if the algorithms are trying to drown it out.

For Technologists: Build AI systems with these constitutional principles in mind. Transparency, accountability, and humans in charge aren't roadblocks to innovation – they're the solid ground for trustworthy tech. Plus, it'll make your jobs easier when we're not all suing you.

For Legal Professionals: Learn about AI and its constitutional implications. The courts will be sorting this out, and they'll need your smarts. Just try not to get too friendly with the robot lawyers.

The future of AI in America won't be decided in fancy Silicon Valley boardrooms or stuffy Washington committee rooms. It'll be decided in state capitols across the nation where elected reps still answer directly to "We the People."

Let's make sure that future honors the Constitution that has protected American liberty for over two centuries. The tech may be new, but the ideas are forever: government of the people, by the people, and for the people.

That's the American way. That's the constitutional way. That's the only way forward. Now go forth and conquer, you digitally-aware patriots!
